Miguel A. Cabrera Minagorri

2024-02-14

YOLO-World: Open vocabulary object detection. No more model training

When you typically work with computer vision you have to train a model on a dataset that is specific to your use case. Until now. Recently Tencent’s AI lab announced YOLO-World, a real-time open vocabulary object detector model for AI vision. You can find the paper here.

YOLO World provides real-time object detection based on open vocabulary. This means that you can tell the model what it should detect and it will do it without specific training. You simply provide the model with a prompt, just like you do with ChatGPT and LLMs.

You may be thinking, why is this so important? Well, it allows shipping vision-based software significantly faster. Typically, computer vision models are trained to identify a specific set of classes (objects) which makes them suitable only for the use case they were trained for. With open vocabulary object detectors, you can use a single model for any application, saving a huge amount of engineering hours that you had to dedicate to collecting images for your dataset, labeling those images, and training and adjusting the model.

Does that mean that model training will disappear? Well, we think fine-tuning will continue to be required when you need to ensure a certain level of accuracy, but in the long term, it is feasible that this kind of model provides a high enough accuracy that you do not need to train a model ever again.

YOLO-World Performace review

YOLO-World provides three models:

- small with 13M (re-parametrized 77M) parameters

- medium with 29M (re-parametrized 92M) parameters

- large with 48M (re-parametrized 110M) parameters

The YOLO-World team benchmarked the model using the LVIS dataset and reported 35.4 AP with 52 FPS for the large version and 26.2 AP with 74.1 FPS for the small version on a V100 GPU. We think it would be very interesting to obtain a benchmark on less powerful GPUs that are more accessible to the general public.

Getting started with YOLO-World

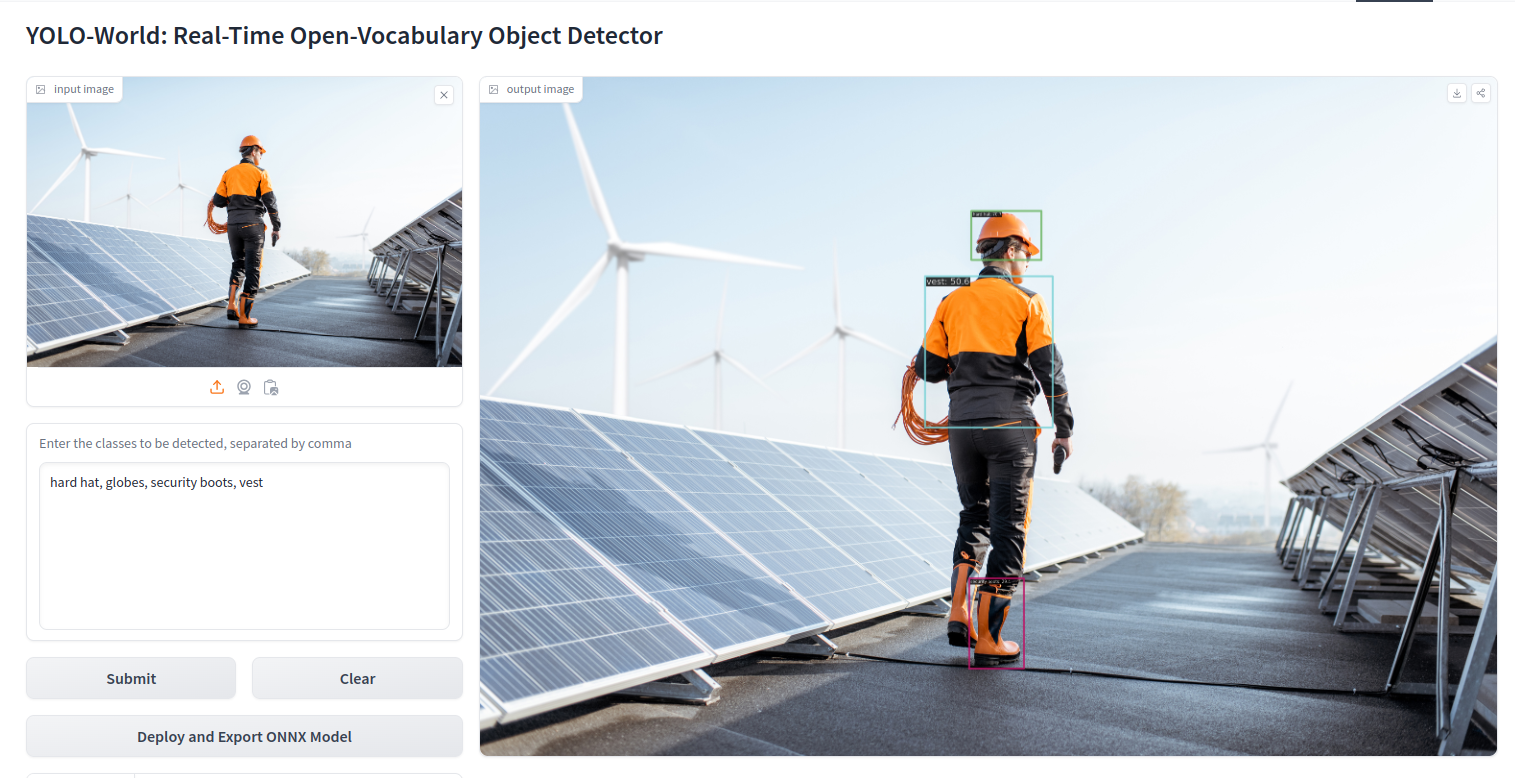

So, how do you get started to test YOLO-World? You can simply go to the HuggingFace page , upload a picture, and write in the text input what you want to identify.

As you can see in the following example, we made a test for PPE (Personal Protective Equipment) detection and it was able to correctly identify it.

You can find more about it on their YOLO-World Github

Conclusions

YOLO-World seems to be a huge step in single-shot object detectors, also, it provides great performance and speed, allowing to deploy it within edge devices.

We are very excited about the possibilities unlocked by open vocabulary object detectors and to create the first Pipeless examples with them. We think combining Pipeless with open vocabulary object detectors is key to creating AI vision applications in minutes.

Subscribe to our newsletter!

Receive new tutorials, community highlights, release notes, and more!